Pytorch

basato sulla documentazione https://www.learnpytorch.io/

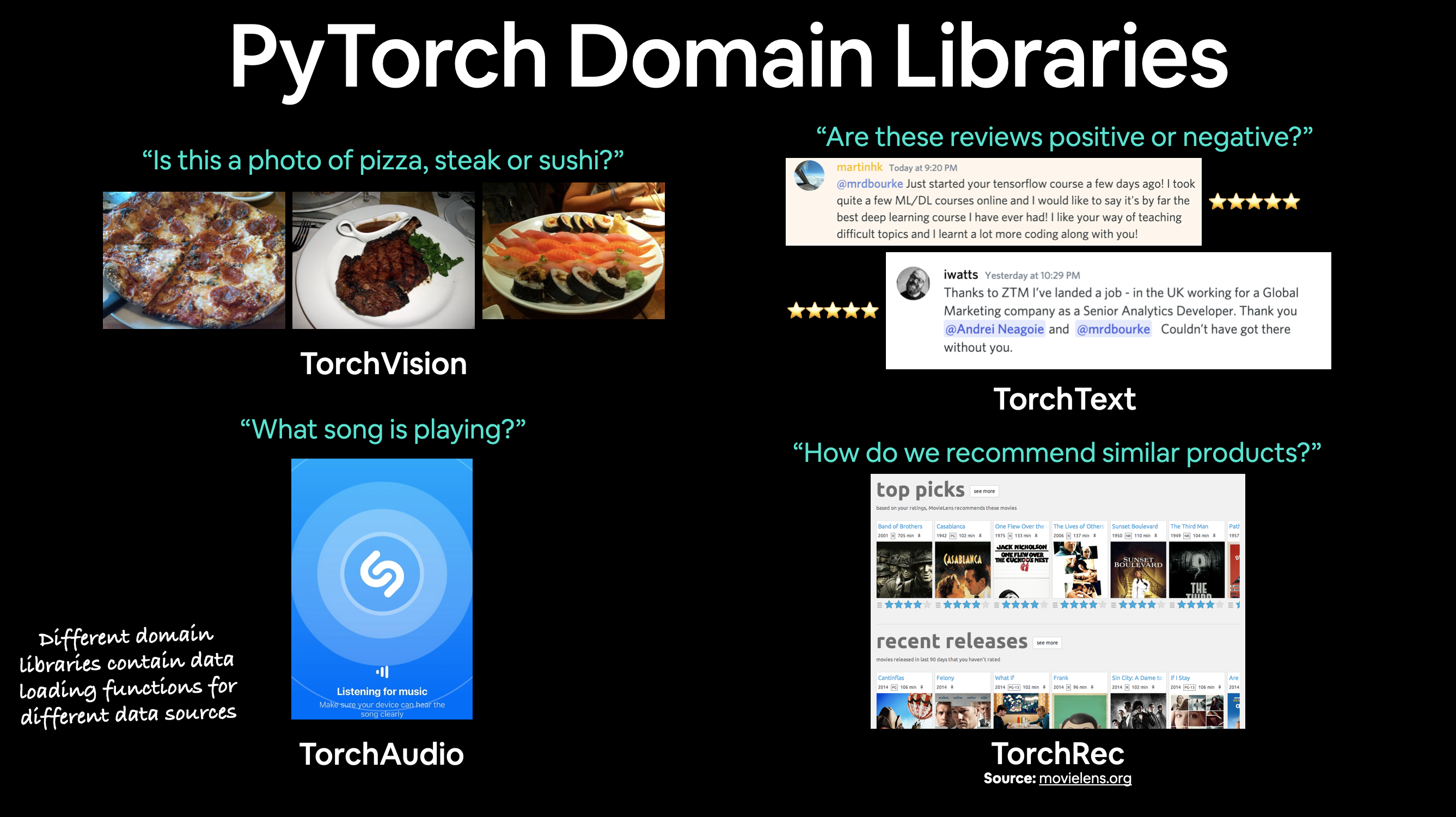

- Introduzione

- Tensore

- Workflow + regressione lineare

- Classificazione (binary classification)

- Multiclass classification

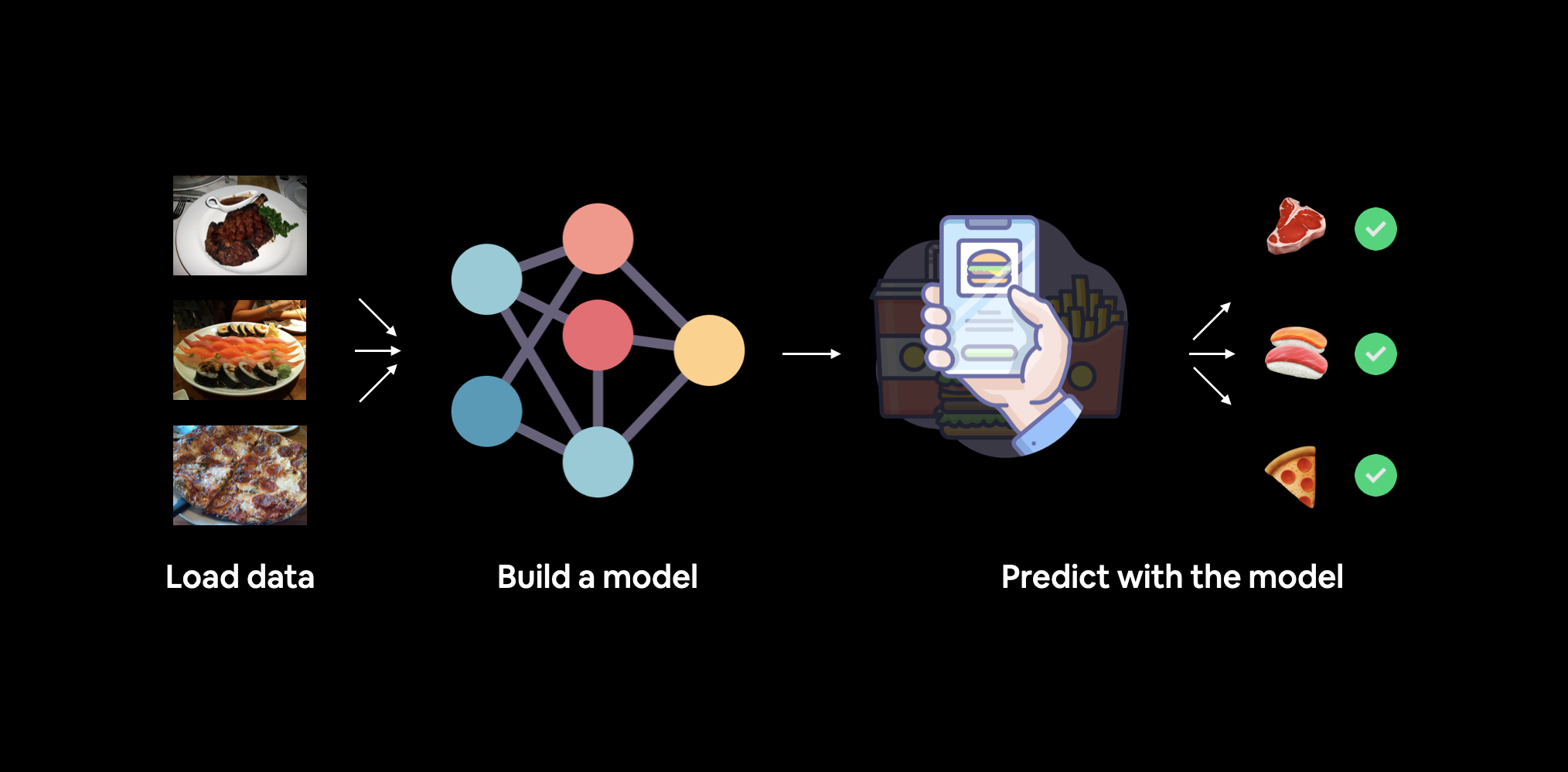

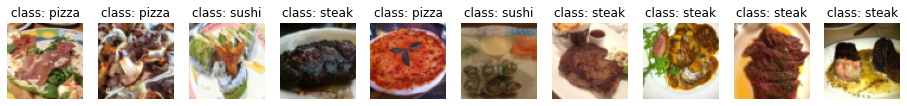

- Computer vision e CNN

- Custom datasets

- Menarello

Introduzione

Iniziamo con una domanda semplice, cos'è il Machine Learning? Beh... iniziamo dicendo come può essere utilizzata:

Deep Learning

Cerchiamo innanzitutto di capire cosa è il deep learning e come si relaziona con il machine learning e l'AI.

Tensore

Cosa è un tensore?

Il tensore è uno scalare (valore singolo), un vettore o una matrice multidimensionale, nella quale vengono storati i valori utilizzati da pytorch.

Nella pratica un tensore è la rappresntazione numerica in forma di array/matrici di un qualsiasi fenomeno esterno, sia esso per es. un'immagine, un suono o un range di valori numerici.

es:

# Scalar

cuda0 = torch.device('cuda:0')

scalar = torch.tensor(7, device=cuda0)

scalar

In questo caso istanzio uno scalare contenete il valore 7, da notere che, avendo un GPU vado a storare questo valore nella ram del GPU e non della cpu.

Di seguito un esempio di matrice

MATRIX = torch.tensor([[7, 8],

[9, 10]], device=cuda0)

MATRIX

Le dimensioni del tensore

NB: cerchiamo di capire bene la differenza tra la dimention e la size. La dimension indica quanti livelli "innestati" sono definiti all'interno della matrice, mentre la size indica il numero totali di righe-colonne presenti nella matrice.

Tensori randomici

Sono molto utili nelle fasi iniziali del training , di seguito un esempio per la creazione:

random_tensor = torch.rand(3,4)

tensor([[0.1207, 0.8136, 0.9750, 0.5804],

[0.4229, 0.6942, 0.4774, 0.5260],

[0.2809, 0.1866, 0.8354, 0.7496]])

# oppure altro esempio:

import torch

cuda0 = torch.device('cuda:0')

random_tensor = torch.rand(2,3,4, device=cuda0)

print (random_tensor)

tensor([[[0.2652, 0.6430, 0.7058, 0.3049],

[0.3983, 0.4169, 0.6228, 0.6622],

[0.6239, 0.7246, 0.1134, 0.9273]],

[[0.5454, 0.9085, 0.2009, 0.7056],

[0.5211, 0.6397, 0.9299, 0.1871],

[0.8542, 0.1733, 0.4378, 0.3836]]], device='cuda:0')

# dove si evince il tensore è di 2 righe ciascuna delle quali è composta

# a sua volta da una matri di 3 righe per 4 colonnese invce si vuole crare un tensore di zeroes.

zeros = torch.zeros(size=(3, 4))

Range di tesori

Use torch.arange(), torch.range() is deprecated

zero_to_ten_deprecated = torch.range(0, 10) # Note: this may return an error in the future

# Create a range of values 0 to 10

zero_to_ten = torch.arange(start=0, end=10, step=1)

print(zero_to_ten)

> tensor([0, 1, 2, 3, 4, 5, 6, 7, 8, 9])

se vuole creare un tensore che la le stesse dimensioni di un altro

ten_zeros = torch.zeros_like(input=zero_to_ten) # will have same shape

print(ten_zeros)

DTypes

è il datatype che definisce i dati contenuto nel tensore

per vedere i tipi di datatypes: https://pytorch.org/docs/stable/tensors.html#data-types

# Default datatype for tensors is float32

float_32_tensor = torch.tensor([3.0, 6.0, 9.0],

dtype=None, # defaults to None, which is torch.float32 or whatever datatype is passed

device=None, # defaults to None, which uses the default tensor type

requires_grad=False) # if True, operations perfromed on the tensor are recorded

float_32_tensor.shape, float_32_tensor.dtype, float_32_tensor.device

# Create a tensor

some_tensor = torch.rand(3, 4)

# Find out details about it

print(some_tensor)

print(f"Shape of tensor: {some_tensor.shape}")

print(f"Datatype of tensor: {some_tensor.dtype}")

print(f"Device tensor is stored on: {some_tensor.device}") # will default to CPU

tensor([[0.2423, 0.6624, 0.3201, 0.3021],

[0.7961, 0.9539, 0.0791, 0.8537],

[0.3491, 0.6429, 0.8308, 0.4690]])

Shape of tensor: torch.Size([3, 4])

Datatype of tensor: torch.float32

Device tensor is stored on: cpu

Forzare i tipi

Ovviamente è possibile cambiare il dtype per quei casi in cui le operazioni generano degli errori per es.

print (x, x.dtype)

> tensor([ 0, 10, 20, 30, 40, 50, 60, 70, 80, 90]) torch.int64

y= torch.mean(x.type(torch.float32))

Operazioni con i tensori

NB: nelle operazioni con i tensori, es. le moltiplicazioni, posso effettuarle tra tipi diversi. (es. int16 x float32)

Le operazioni basi sono le classiche: +,-,*,/ e moltiplicazione tra matrici:

# Create a tensor of values and add a number to it

tensor = torch.tensor([1, 2, 3])

tensor + 10

tensor([11, 12, 13])

# Multiply it by 10

tensor * 10

tensor([10, 20, 30])

#Notice how the tensor values above didn't end up being tensor([110, 120, 130]), this is because the values inside the tensor don't

#change unless they're reassigned.

# Tensors don't change unless reassigned

tensor

tensor([1, 2, 3])

#Let's subtract a number and this time we'll reassign the tensor variable.

# Subtract and reassign

tensor = tensor - 10

tensor

tensor([-9, -8, -7])

# Add and reassign

tensor = tensor + 10

tensor

tensor([1, 2, 3])

PyTorch also has a bunch of built-in functions like torch.mul() (short for multiplcation) and torch.add() to perform basic operations.

# Can also use torch functions

torch.multiply(tensor, 10)

tensor([10, 20, 30])

# Original tensor is still unchanged

tensor

tensor([1, 2, 3])

#However, it's more common to use the operator symbols like * instead of torch.mul()

# Element-wise multiplication (each element multiplies its equivalent, index 0->0, 1->1, 2->2)

print(tensor, "*", tensor)

print("Equals:", tensor * tensor)

tensor([1, 2, 3]) * tensor([1, 2, 3])

Equals: tensor([1, 4, 9])

Moltiplicazione tra matrici

One of the most common operations in machine learning and deep learning algorithms (like neural networks) is matrix multiplication.

PyTorch implements matrix multiplication functionality in the torch.matmul() method.

Regole della moltiplicaazione di matrici

Regola della dimensione interna

La dimensione interna DEVE essere la stessa, ovvero, se abbiamo una matrice (3,2) e un'altra matrice di (3,2)

la moltiplicazione genererà un errore in quanto le dimensioni interne non coincidono.

Per dimensione interna si intende (3,2) x (2,3) in questo caso il 2, dove nella prima matricie sono le colonne mentre nella secondo le righe. (nel primo esempio erano invece diverse e quindi non è possibile effettuare la moltiplicazione.

Regola della matrice risultante

La shape della matrice risultante è pari alle dimensini esterne delle due matrici.

Ovvero nel caso di matrici (2,3) x (3,2) che quindi soffisfano la regola della dimensione interna, la risultante sarà una matrice la cui dimensione sarà la dimensione esterna, quindi (2,2)

Come moltiplicare due matrici

Di seguito viene mostrato graficamente come moltiplicare due matrici:

....

Differenza tra "Element-wise multiplication" e "Matrix multiplication".

Element wise moltiplication moltiplica ogni elemento mentre invece matrix multiplication effettua il totale delle moltiplicatione delle matrici.

tensor variable with values [1, 2, 3]:

| Operation | Calculation | Code |

|---|---|---|

| **Element-wise multiplication** | `[1*1, 2*2, 3*3]` = `[1, 4, 9]` | `tensor * tensor` |

| **Matrix multiplication** | `[1*1 + 2*2 + 3*3]` = `[14]` | `tensor.matmul(tensor)` |

# Element-wise matrix multiplication

tensor * tensor

>tensor([1, 4, 9])

# Matrix multiplication

torch.matmul(tensor, tensor)

> tensor(14)

# Can also use the "@" symbol for matrix multiplication, though not recommended

tensor @ tensor

>tensor(14)

Manipolazione dello shape

Coonsideriamo il caso

tensor_A = torch.tensor([[1, 2],

[3, 4],

[5, 6]], dtype=torch.float32)

tensor_B = torch.tensor([[7, 10],

[8, 11],

[9, 12],

[13,14]], dtype=torch.float32)

se eftettuiamo la motiplicazione dei due, per le due regole sopra citate, verrà generato un errore in quanto la dimensione interna non matcha:

errore -> torch.matmul(tensor_A, tensor_B) in quanto abbiamo una moltiplicare di (3,2) x (4,2) che non coincidono internamente.

ma allora che fare? ebbene in questo caso possiamo far coincidere le dimensioni interne di uno dei due tensori utilizzando la funzione "transpose", come di seguito

torch.matmul(tensor_A, tensor_B.T) dove il metodo .T effettua la traspose del tensore B rendendolo compatibile con A, ovvero:

tensor([[ 7., 8., 9., 13.],

[10., 11., 12., 14.]])

che traspone la (4,2) in (2,4) e quindi l'output della moltiplicare sarà:

# effetto la moltiplicazione ora con la transposizione è diventato -> torch.Size([3, 2]) * torch.Size([2, 4])

torch.mm(tensor_A*tensor_A.T)

Output:

tensor([[ 27., 30., 33., 41.],

[ 61., 68., 75., 95.],

[ 95., 106., 117., 149.]])

Output shape: torch.Size([3, 4])

che soddispafa la prima regola (dimensione interna) e la seconda regola (dimensione tensore risultate pari alla dimensione esterna)

NOTA: per fare delle prove andare sul sito http://matrixmultiplication.xyz/

Aggregazione del tensore

Oltre alla moltiplicazione abbiamo altri tipi di operazioni comuni che possono essere effettuate sui tensori ovvero:

min, max, mean, sum, ed altro... che nella pratica si tratta di invocare il metodo dell'oggetto "torch" es. torch.mean(tensore)

NOTA: Può essere che questi metodi diano degli errori sui tipi, es il metodo mean non accetta un dtype long, per questo motivo il tipo può essere convertito "al volo" tramite il metodo type, es. torch.mean ( X.type(torch.float32) ) -> che lo casta a floating 32.

Posizionamento del min e del max

Se vogliamo sapere l'indice del valore minimo o massimo all'iterno del tensore allora toch ci mette a disposizione il metodo argmin es.

#Create a tensor

tensor = torch.arange(10, 100, 10)

print(f"Tensor: {tensor}")

# Returns index of max and min values

print(f"Index where max value occurs: {tensor.argmax()}")

print(f"Index where min value occurs: {tensor.argmin()}")

Tensor: tensor([10, 20, 30, 40, 50, 60, 70, 80, 90])

Index where max value occurs: 8

Index where min value occurs: 0

Reshaping, stacking, squeezing e un squeezing

Lo scopo di questi metodi è manipolare il tensore in modo da modificarne lo "shape" o la dimensione. Di seguito viene riportata una breve descrizione dei metodi.

| Metodo | Descrizione (online) |

|---|---|

| torch.reshape(input, shape) | Reshapes `input` to `shape` (if compatible), can also use `torch.Tensor.reshape()`. |

| torch.Tensor.view(shape) | Returns a view of the original tensor in a different `shape` but shares the same data as the original tensor. |

| torch.stack(tensors, dim=0) | **Concatenates** a sequence of `tensors` along a new dimension (`dim`), all `tensors` must be same size. |

| torch.squeeze(input) | Squeezes `input` to **remove** all the dimenions with value `1`. |

| torch.unsqueeze(input, dim) | Returns `input` with a dimension value of `1` **added** at `dim`. |

| torch.permute(input, dims) | Returns a *view* of the original `input` with its dimensions permuted (rearranged) to `dims`. |

creiamo un vettore con 9 valori:

# creo un vettore semplice

import torch

x = torch.arange(1., 10.)

x, x.shape

tensor([1., 2., 3., 4., 5., 6., 7., 8., 9.])

shape -> torch.Size([9])

Reshape

Nell'esempsio voglio convertire il tensore in una matrice di una riga per nove colonne, visto che il numero di elementi è compatibile con l'operazione.

ATTENZIONE che reshape deve essere compatibile con la dimensione.

Quindi:

y = x.reshape(9,1)

y varrà:

tensor([[1.],

[2.],

[3.],

[4.],

[5.],

[6.],

[7.],

[8.],

[9.]])

shape -> torch.Size([9, 1])

se inceve volessimo creare un tensore multidimensionale di una riga per nove colonne:

y = x.reshape(1,9)

``` tensor([[1., 2., 3., 4., 5., 6., 7., 8., 9.]])

shape -> torch.Size([1, 9])

##### View

La view è simile a reshape solo che l'output condivide la stessa area di memoria, in pratica modificando uno si modifica anche l'altro, es.

z = x.view(1,9)

\# questo comando modifica la colonna zero di tutte le righe (vale anche se abbiamo una sola riga)

z \[:,0\] = 5

a questo punto sia z che x puntano allo stesso valore (5) nella colonna zero

##### Stack

Concatena due o più tensori purchè abbiano la stessa dimensione e che siano in una lista. (es.

```python

tensor_one = torch.tensor([[1,2,3],[4,5,6]])

print(tensor_one)

tensor([[1, 2, 3],

[4, 5, 6]])

tensor_two = torch.tensor([[7,8,9],[10,11,12]])

tensor_tre = torch.tensor([[13,14,15],[16,17,18]])

#NB devono essere in una lista es. tensor_list = [tensor_one, tensor_two, tensor_tre] o direttamente come sotto

staked_tensor = torch.stack([tensor_one,tensor_two,tensor_tre])

print(staked_tensor.shape)

torch.Size([3, 2, 3])

print(staked_tensor)

tensor([[[ 1, 2, 3],

[ 4, 5, 6]],

[[ 7, 8, 9],

[10, 11, 12]],

[[13, 14, 15],

[16, 17, 18]]])

Squeeze e UnSqueeze

Lo squeeze rimuove tutte le dimensioni "singole" dal tensore, es:

import torch

# creo un array a (dimensione 0)

xx = torch.arange(1., 10.)

print (xx)

>tensor([1., 2., 3., 4., 5., 6., 7., 8., 9.])

# aggiungo una dimensione (dimensione 1)

xx = xx.reshape(1,9)

print (xx)

>tensor([[1., 2., 3., 4., 5., 6., 7., 8., 9.]])

#tolgo la dimensione che ho aggiunto (solo se dim 1)

print(xx.squeeze())

print (xx)

>tensor([1., 2., 3., 4., 5., 6., 7., 8., 9.])

#Con l'unsqueeze si aggiunga una singola dimensione

print(staked_tensor.squeeze())

>tensor([[[1., 2., 3., 4., 5., 6., 7., 8., 9.]]])

Permute

L'operazione permute permette di "switchare" una dimensione con l'altra, ovvero:

# creiamo un tensore di dimensione 3 di 224 x 224 x 3, che btw potrebbe

# rappresentare un'immagine dove le prime due dimensione sono i pixel mentre la terza il valore RGB

x_original = torch.rand(size=(224, 224, 3))

# la permute lavora per indici, nel caso specifico swppiamo il secondo indice ( è zero based) e lo

# mettimao al primo posto (zero) e così via

x_permuted = x_original.permute(2, 0, 1) # shifts axis 0->1, 1->2, 2->0

print(f"Previous shape: {x_original.shape}")

Previous shape: torch.Size([224, 224, 3])

print(f"New shape: {x_permuted.shape}")

New shape: torch.Size([3, 224, 224])

si noti quindi i valori delle dimensioni vengono "swappati" tra di loro secondo l'ordine definito dal medoto "permute"

ricordarsi inoltre che anche la permute lavora su una vista dei valori originali, con tutto ciò che comporta l'uso di una vista in torch

Indexing

L'indexing è utilizzato per estrapolare, navigare, i dati di un tensore, con pytorch è simile a quello di numpy.

es.

# Creo un tensore

import torch

x = torch.arange(1, 10).reshape(1, 3, 3)

x, x.shape

>tensor([[[1, 2, 3],

[4, 5, 6],

[7, 8, 9]]]

>torch.Size([1, 3, 3])

# target su primo elemento della matrice tridimensionale

x[0]

>tensor([[1, 2, 3],

[4, 5, 6],

[7, 8, 9]])

# target su primo elemento della matrice tridimensionale e di questo elemento il primo

x[0][0]

>tensor([1, 2, 3])

# target su primo elemento della matrice tridimensionale e di questo elemento il primo e del restante il primo

x[0][0][0]

>1

Selezionare tutti gli elementi di una dimensione

Per selezionare tutti gli elementi di una dimensione bisogna utilizzare il carattere ":"

Per selezionare un'altra dimensione bisogna utilizzare il carattere ","

Ovviamente sono in ordine di dimensione, la prima virgola sarà quella della dimensione zero, la seconda della prima, la terza della seconda e così via.

- per esempio voglio estrarre tutti i valori da tutte le dimensioni zero, il primo valore della dimensione uno.

import torch

x = torch.arange(1, 10).reshape(1, 3, 3)

x, x.shape

>tensor([[[1, 2, 3],

[4, 5, 6],

[7, 8, 9]]]) torch.Size([1, 3, 3])

x[: , 0]

> tensor([[1, 2, 3]])

- tutte le dimensini zero, e uno ma solo gli indice uno della seconda

x[:,:,1]

>tensor([[2, 5, 8]])

- tutti i valori della prima dimensione, ma solo il primo indice della prima e della seconda dimensione

x[:,1,1]

> tensor([5])

- l'indice zero della dimensione zero e delle dimensione uno, e tutti i valori della seconda dimensione

x[0, 0, :] # same as x[0][0]

> tensor([1, 2, 3])

- ritornare il valore '9'

tensor([[1, 2, 3],

[4, 5, 6],

[7, 8, 9]])

x[0,2,2]

- ritornare i valori 3,6,9

tensor([[1, 2, 3],

[4, 5, 6],

[7, 8, 9]])

x[0,:,2]

oopure

x[:,:,2]# Create a tensor

import torch

x = torch.arange(1, 28).reshape(3, 3, 3)

# x, x.shape

print(x)

>tensor([[[ 1, 2, 3],

[ 4, 5, 6],

[ 7, 8, 9]],

[[10, 11, 12],

[13, 14, 15],

[16, 17, 18]],

[[19, 20, 21],

[22, 23, 24],

[25, 26, 27]]])

print(x[:,0,2])

>tensor([ 3, 12, 21])

Pytorch tensors e Numpy

Numpy è molto utilizzato per elaborare i dati velocemente, accade però che questi dati debbano essere caricati in pytorch per essere dati in pasto alla rete neurale di turno, sia essa nella ram "tradizionale" che quella della GPU.

Un metodo utilizzabile è torch.from_numpy (mdarray) o vice versa torch.Tensor.numpy() es:

``` # da Numpy a tensor

import torch

import numpy as np

array = np.arange (1.0, 8,0)

tensor = torch.from_numpy (array)

print (array,tensor)array([1., 2., 3., 4., 5., 6., 7.]) tensor([1., 2., 3., 4., 5., 6., 7.], dtype=torch.float64)

Attenzione torch converte di defaut in dtype=torch.float64, se invece vogliamo forzare ad un altro tipo es. float32 allora dobbiamo utilizzare il metodo types es: tensor = torch.from_numpy (array).type(torch.float32)

```python

# da Tesor a Numpy

tensor = torch.ones(7)

numpy_tensor = tensor.numpy()

print (array,tensor)

>tensor([1., 1., 1., 1., 1., 1., 1.]),

>array([1., 1., 1., 1., 1., 1., 1.], dtype=float32))

Attenzione in questo caso passiamo da float64 di Torch a float32 di numpy, quindi con possibile perdita di informazioni.

Riproducibilità

Una rete neurale in genere si sviluppa iniziando con valori casuali, poi effettua sempre più operazioni sui tensori che andranno ad aggiornare i numeri, prima casuali, affinandone i volori a quelli utili per lo scopo previsto.

Se desideriamo generare dei numeri "random" che siano sempre gli stessi :) possiamo utilizzare una modalità "random seed" in modo che il caso possa essere riprodotto con gli stessi valori "random" più volte.

import torch

import random

# # Set the random seed

RANDOM_SEED=42 # try changing this to different values and see what happens to the numbers below

torch.manual_seed(seed=RANDOM_SEED)

random_tensor_C = torch.rand(3, 4)

# Have to reset the seed every time a new rand() is called

# Without this, tensor_D would be different to tensor_C

torch.random.manual_seed(seed=RANDOM_SEED) # try commenting this line out and seeing what happens

random_tensor_D = torch.rand(3, 4)

print(f"Tensor C:\n{random_tensor_C}\n")

print(f"Tensor D:\n{random_tensor_D}\n")

print(f"Does Tensor C equal Tensor D? (anywhere)")

print (random_tensor_C == random_tensor_D)

> tensor([[True, True, True, True],

[True, True, True, True],

[True, True, True, True]])

Torch on GPU

I tensori e gli oggetti pytorch possono essere eseguiti sia dalla CPU che nella GPU grazie per es. ai CUDA di NVidia.

Per verificare se la GPU è visibile da Torch eseguire il comando:

# Check for GPU

import torch

torch.cuda.is_available()

> true

a questo punto possiamo configurare torch in mode giri nella GPU o nella CPU tramite il comando:

# Set device type

device = "cuda" if torch.cuda.is_available() else "cpu"

some_tensor = some_tensor.to(device)

e vediamo le due possibili casistiche:

# Create tensor (default on CPU)

tensor = torch.tensor([1, 2, 3])

# Tensor not on GPU

print(tensor, tensor.device)

>tensor([1, 2, 3]) cpu

# Move tensor to GPU (if available)

tensor_on_gpu = tensor.to(device)

print (tensor_on_gpu,tensor_on_gpu, tensor_on_gpu.device)

>tensor([1, 2, 3], device='cuda:0') cuda:0

oppure

# creo due tensori random nella GPU

tensor_A = torch.rand(size=(2,3)).to(device)

tensor_B = torch.rand(size=(2,3)).to(device)

tensor_A, tensor_B

se poi vogliamo portare i valori dalla GPU alla GPU dobbiamo fare attenzione in quanto non possiamo semplicemente:

``` # If tensor is on GPU, can't transform it to NumPy (this will error) tensor_on_gpu.numpy()

TypeError Traceback (most recent call last) Cell In[13], line 2 1 # If tensor is on GPU, can't transform it to NumPy (this will error) ----> 2 tensor_on_gpu.numpy()

TypeError: can't convert cuda:0 device type tensor to numpy. Use Tensor.cpu() to copy the tensor to host memory first.

dobbiamo invece:

Instead, copy the tensor back to cpu

tensor_back_on_cpu = tensor_on_gpu.cpu().numpy() print (tensor_back_on_cpu)

array([1, 2, 3], dtype=int64)

##### Esercizi

All of the exercises are focused on practicing the code above.

You should be able to complete them by referencing each section or by following the resource(s) linked.

**Resources:**

- [Exercise template notebook for 00](https://github.com/mrdbourke/pytorch-deep-learning/blob/main/extras/exercises/00_pytorch_fundamentals_exercises.ipynb).

- [Example solutions notebook for 00](https://github.com/mrdbourke/pytorch-deep-learning/blob/main/extras/solutions/00_pytorch_fundamentals_exercise_solutions.ipynb) (try the exercises *before* looking at this).

1. Documentation reading - A big part of deep learning (and learning to code in general) is getting familiar with the documentation of a certain framework you're using. We'll be using the PyTorch documentation a lot throughout the rest of this course. So I'd recommend spending 10-minutes reading the following (it's okay if you don't get some things for now, the focus is not yet full understanding, it's awareness). See the documentation on [`torch.Tensor`](https://pytorch.org/docs/stable/tensors.html#torch-tensor) and for [`torch.cuda`](https://pytorch.org/docs/master/notes/cuda.html#cuda-semantics).

2. Create a random tensor with shape `(7, 7)`.

3. Perform a matrix multiplication on the tensor from 2 with another random tensor with shape `(1, 7)` (hint: you may have to transpose the second tensor).

4. Set the random seed to `0` and do exercises 2 & 3 over again.

5. Speaking of random seeds, we saw how to set it with `torch.manual_seed()` but is there a GPU equivalent? (hint: you'll need to look into the documentation for `torch.cuda` for this one). If there is, set the GPU random seed to `1234`.

6. Create two random tensors of shape `(2, 3)` and send them both to the GPU (you'll need access to a GPU for this). Set `torch.manual_seed(1234)` when creating the tensors (this doesn't have to be the GPU random seed).

7. Perform a matrix multiplication on the tensors you created in 6 (again, you may have to adjust the shapes of one of the tensors).

8. Find the maximum and minimum values of the output of 7.

9. Find the maximum and minimum index values of the output of 7.

10. Make a random tensor with shape `(1, 1, 1, 10)` and then create a new tensor with all the `1` dimensions removed to be left with a tensor of shape `(10)`. Set the seed to `7` when you create it and print out the first te

**Extra-curriculum**

<div class="cell text_cell rendered selected" id="bkmrk-spend-1-hour-going-t" tabindex="2"><div class="inner_cell"><div class="text_cell_render rendered_html" dir="ltr" tabindex="-1">- Spend 1-hour going through the [PyTorch basics tutorial](https://pytorch.org/tutorials/beginner/basics/intro.html) (I'd recommend the [Quickstart](https://pytorch.org/tutorials/beginner/basics/quickstart_tutorial.html) and [Tensors](https://pytorch.org/tutorials/beginner/basics/tensorqs_tutorial.html) sections).

- To learn more on how a tensor can represent data, see this video: [What's a tensor?](https://youtu.be/f5liqUk0ZTw)

</div></div></div>

Workflow + regressione lineare

Introduzione

Iniziamo a trattare la regressione che nella pratica risulta essere la predizione di un numero a differenza per es. della classificazione che tratta la previsione di un "tipo", es. cats vs dogs.

In questa lezione vedremo un tipo "torch workflow" in salsa "vanilla", basico ma utile per comprendere gli step logici. Di seguito una rappresentazione grafica del flow:

| **Topic** | **Contents** |

|---|---|

| 1 Getting data ready** | Data can be almost anything but to get started we're going to create a simple straight line |

| 2 Building a model** | Here we'll create a model to learn patterns in the data, we'll also choose a **loss function**, **optimizer** and build a **training loop**. |

| 3 Fitting the model to data (training)** | We've got data and a model, now let's let the model (try to) find patterns in the (**training**) data. |

| 4 Making predictions and evaluating a model (inference)** | Our model's found patterns in the data, let's compare its findings to the actual (**testing**) data. |

| 5 Saving and loading a model** | You may want to use your model elsewhere, or come back to it later, here we'll cover that. |

| 6 Putting it all together** | Let's take all of the above and combine it. |

Torch.NN

Per costruire una rete neurale possiamo iniziare da torch.NN dove per .nn si vuole indicare Neural Network

Preparazione dei dati

La fase iniziare e una delle più importanti nel ML è la preparazione dei dati, es:

Gli step principali nella preparazione dei dati sono:

- trasforare i dati in una rappresentazione numerica

- costruire un modello che impari o scopra dei "pattern" nella rappresentazione numerica definita per il modello che vogliamo analizzare

Inziamo utilizzando la classica regressione lineare utilizzando la formula base y = wx + b, dove b sono i bias (detta intercetta) e w i pesi o coefficiente angolare. Per un approfondimento sulla regressione lineare vedi corso https://cms.marcocucchi.it/books/machine-learing/page/regressione-lineare

Ma andiamo al codice

# settiamo in parametri dell'equazione

weight = 0.7

bias = 0.3

# creiamo i dati

start = 0

end = 1

step = 0.02

# agigungo una dimensione extra tramite l'unsqueeze

X = torch.arange(start, end, step).unsqueeze(dim=1)

y = weight * X + bias

X.shape, X[:10], y[:10]

>(torch.Size([50, 1]),

(tensor([[0.0000],

[0.0200],

[0.0400],

[0.0600],

[0.0800],

[0.1000],

[0.1200],

[0.1400],

[0.1600],

[0.1800]]),

tensor([[0.3000],

[0.3140],

[0.3280],

[0.3420],

[0.3560],

[0.3700],

[0.3840],

[0.3980],

[0.4120],

[0.4260]]))

Nell'esempio sopra riportato andremo a creare i dati relativi ad una semplice equazione lineare che verranno inviati alla rete neurale per identificare il pattern che più si avvicina all'equazione Y= vw + b che li ha originati

Training, Validation e Test sets

Uno dei concetti più importanti nel ML è la suddivisione dei dati in tre grupi:

| Split | Purpose | Amount of total data | How often is it used? |

|---|---|---|---|

| **Training set** | sono i dati sui quali il Pytoch si "allena" per trovare il modello | ~60-80% | Always |

| **Validation set** | Non sempre utilizzato, nella pratica serve per effettuare una validazione interna del training. Da notare che questi non vengono utilizzati nella fase di training, servono solo per una validazione del modello in fase di training. | ~10-20% | Often but not always |

| **Testing set** | Validazione finare del modello. | ~10-20% | Always |

Come splittare i dati i dati in training e testing:

# Create train/test split

train_split = int(0.8 * len(X)) # 80% of data used for training set, 20% for testing

X_train, y_train = X[:train_split], y[:train_split]

X_test, y_test = X[train_split:], y[train_split:]

len(X_train), len(y_train), len(X_test), len(y_test)

in questo modo dividiamo i dati dove l'80% sono dedicati al training e il restante 20% per la fase di test

Visualizziamo ora i dati:

def plot_predictions(train_data=X_train,

train_labels=y_train,

test_data=X_test,

test_labels=y_test,

predictions=None):

"""

Plots training data, test data and compares predictions.

"""

plt.figure(figsize=(10, 7))

# Plot training data in blue

plt.scatter(train_data, train_labels, c="b", s=4, label="Training data")

# Plot test data in green

plt.scatter(test_data, test_labels, c="g", s=4, label="Testing data")

if predictions is not None:

# Plot the predictions in red (predictions were made on the test data)

plt.scatter(test_data, predictions, c="r", s=4, label="Predictions")

# Show the legend

plt.legend(prop={"size": 14});

plot_predictions();

e l'output risulta:

in blu i dati di traing, mentre in verde quelli di test.

Ora creiamo il modello:

# Creiamo un classe di regressione lineare che eredita da nn.Module

class LinearRegressionModel(nn.Module):

# inizializzazione delle rete neurale

def __init__(self):

super().__init__()

# normalmente le w e b sono più complesso di questo caso...

# il nome di questo tipo di variabili è arbitrario

self.weights = nn.Parameter(torch.randn(1, # generiamo un (1) tensore con un valore randomico

dtype=torch.float), # <- PyTorch preferisce utilizzare float32 by default

requires_grad=True) # pytoch aggiornerà il parametro tramite il backpropagation e discesa del gradiente

# il nome di questo tipo di variabili è arbitrario

self.bias = nn.Parameter(torch.randn(1, # generiamo un (1) tensore con un valore randomico

dtype=torch.float), # <- PyTorch preferisce utilizzare float32 by default

requires_grad=True) # pytoch aggiornerà il parametro tramite il backpropagation e discesa del gradiente

# propagazione di tipo "forward"

def forward(self, x: torch.Tensor) -> torch.Tensor: # <- "x" input data (training/testing features)

return self.weights * x + self.bias # <- questa è la formula della regressione lineare (y = m*x + b)

La classe torch.NN è la base per la creazione dei "grafi di neuroni", questa classe effettua due macro tipologie di operazioni, ovvero:

- la discesa del gradiente

- la Backpropagation

tenendo traccia della variazione dei pesi e dei bias.

Il metodo "torch.randn" può generare un tensore il cui shape è passato in input es.

torch.randn(2, 3)

PyTorch model building essentials

Le componenti princiali (più o meno) per creare una rete neurale in Pytorch sono:

torch.nn, torch.optim, torch.utils.data.Dataset and torch.utils.data.DataLoader. For now, we'll focus on the first two and get to the other two later (though you may be able to guess what they do).

| PyTorch module | What does it do? |

|---|---|

| torch.nn | Contains all of the building blocks for computational graphs (essentially a series of computations executed in a particular way). |

| torch.nn.Parameter | Stores tensors that can be used with `nn.Module`. If `requires_grad=True` gradients (used for updating model parameters via [**gradient descent**](https://ml-cheatsheet.readthedocs.io/en/latest/gradient_descent.html)) are calculated automatically, this is often referred to as "autograd". |

| torch.nn.Module | The base class for all neural network modules, all the building blocks for neural networks are subclasses. If you're building a neural network in PyTorch, your models should subclass `nn.Module`. Requires a `forward()` method be implemented. |

| torch.optim | Contains various optimization algorithms (these tell the model parameters stored in `nn.Parameter` how to best change to improve gradient descent and in turn reduce the loss). |

| def forward() | All `nn.Module` subclasses require a `forward()` method, this defines the computation that will take place on the data passed to the particular `nn.Module` (e.g. the linear regression formula above).

Questa classe in genere va sempre implementata |

If the above sounds complex, think of like this, almost everything in a PyTorch neural network comes from torch.nn,

nn.Modulecontains the larger building blocks (layers)nn.Parametercontains the smaller parameters like weights and biases (put these together to makenn.Module(s))forward()tells the larger blocks how to make calculations on inputs (tensors full of data) withinnn.Module(s)torch.optimcontains optimization methods on how to improve the parameters withinnn.Parameterto better represent input data

Visualizziamo i valori w e b prima dell'elaboraizone:

# Set manual seed since nn.Parameter are randomly initialzied

torch.manual_seed(42)

# Create an instance of the model (this is a subclass of nn.Module that contains nn.Parameter(s))

model_0 = LinearRegressionModel()

# Check the nn.Parameter(s) within the nn.Module subclass we created

list(model_0.parameters())

>[Parameter containing:

tensor([0.3367], requires_grad=True),

# vediamo la lista dei parametri

model_0.state_dict()

>OrderedDict([('weights', tensor([0.3367])), ('bias', tensor([0.1288]))])

proviamo a fare delle predizioni senza aver fatto il training giusto per vedere come si comporta il modello.

Per fare delle predizioni si utilizza il medoto .inference_mode():

# Make predictions with model

# con torch.inference_mode() facciamo in modo non si salvi i parametri che normalmente vengono

# utilizzati nella fase di training, cosa inutile durante la predizione in quanto il training

# dovrebbe essere già stato effettuato. In soldoni migliori performace durante la fare predittiva

with torch.inference_mode():

y_preds = model_0(X_test)

# Check the predictions

print(f"Number of testing samples: {len(X_test)}")

print(f"Number of predictions made: {len(y_preds)}")

print(f"Predicted values:\n{y_preds}")

Number of testing samples: 10

Number of predictions made: 10

Predicted values:

tensor([[0.3982],

[0.4049],

[0.4116],

[0.4184],

[0.4251],

[0.4318],

[0.4386],

[0.4453],

[0.4520],

[0.4588]])

# proviamo a visualizzare i valori della previsione

plot_predictions(predictions=y_preds)

e come si bene notare i valori predetti (rosso) "poco ci azzeccano" con i valori originali... quindi le predizioni sono praticamente random.

Training

Loss function

Prima di trattare il training per se vediamo di capire come misura quanto il modello si avvicina ai valori attesi o ideali, per effettuare questo controllo viene utilizzata la "loss function" o "cost function". (vedo https://pytorch.org/docs/stable/nn.html#loss-functions)

Nella pratica uno dei metodi più basici è misurare la distanza tra gli attesi e i predetti.

Optimizer

L'optimizer serve per ottimizzare i valori predetti in modo che si avvicinino sempre di più ai valori ideali, quindi per migliorare la loss function. (i cui delta vengono ritornati dalla "loss function", in modo che la loss function stessa indichi un miglioramento della predizione)

Nello specifico per pytorch servirà un training loop e un test loop.

Creare una loss function e un optimizer

| Function | What does it do? | Where does it live in PyTorch? | Common values |

|---|---|---|---|

| **Loss function** | Measures how wrong your models predictions (e.g. `y_preds`) are compared to the truth labels (e.g. `y_test`). Lower the better.

vedi tabella delle loss functions: |

PyTorch has plenty of built-in loss functions in [`torch.nn`](https://pytorch.org/docs/stable/nn.html#loss-functions). | Mean absolute error (MAE) for regression problems ([`torch.nn.L1Loss()`](https://pytorch.org/docs/stable/generated/torch.nn.L1Loss.html)). Binary cross entropy for binary classification problems ([`torch.nn.BCELoss()`](https://pytorch.org/docs/stable/generated/torch.nn.BCELoss.html)). |

| **Optimizer** | Tells your model how to update its internal parameters to best lower the loss.

vedi lista degli optimizers |

You can find various optimization function implementations in [`torch.optim`](https://pytorch.org/docs/stable/optim.html). | Stochastic gradient descent ([`torch.optim.SGD()`](https://pytorch.org/docs/stable/generated/torch.optim.SGD.html#torch.optim.SGD)). Adam optimizer ([`torch.optim.Adam()`](https://pytorch.org/docs/stable/generated/torch.optim.Adam.html#torch.optim.Adam)). |

Esistono varie famiglie di "loss function" a seconda del tipo di elaborazione, per la predizioni di valori numerici è possibile utilizzare la Mean absolute error (MAE, in PyTorch: torch.nn.L1Loss) che miura la differenze in valori assoluti tra due punti che nel nostro caso sono le "prediction" e le "label" (che sono i valori attesi) per poi calcolarne il valore medio.

Di seguito una rappresentazione grafica dello MAE, dove si evidenzia il calcolo medio della differenza in valore assoulto tra valori attesi e valori predetti.

quindi:

# creiamo una loss function

loss_fn = nn.L1Loss() # MAE

# creiamo un optimizer, scegliamo il classico Stocastic Gradient Descent

optimizer = torch.optim.SGD(params=model_0.parameters(), # passiamo i parametri da ottimizzare (in questo caso "w" e "b"

lr=0.01) # settiamo il passo per il calcolo del gradiente, più piccolo = più tempo

di seguito gli step logici della fare si training:

PyTorch training loop

For the training loop, we'll build the following steps:

| Number | Step name | What does it do? | Code example |

|---|---|---|---|

| 1 | Forward pass | The model goes through all of the training data once, performing its `forward()` function calculations. | `model(x_train)` |

| 2 | Calculate the loss | The model's outputs (predictions) are compared to the ground truth and evaluated to see how wrong they are. | `loss = loss_fn(y_pred, y_train)` |

| 3 | Zero gradients | The optimizers gradients are set to zero (they are accumulated by default) so they can be recalculated for the specific training step. | `optimizer.zero_grad()` |

| 4 | Perform backpropagation on the loss | Computes the gradient of the loss with respect for every model parameter to be updated (each parameter with `requires_grad=True`). This is known as **backpropagation**, hence "backwards". | `loss.backward()` |

| 5 | Update the optimizer (**gradient descent**) | Update the parameters with `requires_grad=True` with respect to the loss gradients in order to improve them. | `optimizer.step()` |

l'algoritmo quindi si può delineare come:

di seguito l'algoritmo:

# forzo il seed per ottenere risultati identici al

torch.manual_seed(42)

# setto le epoche, ogni epoca è un passaggio in "foward propagation" dei pesi attraverso la rete neurale

# dall'input layer all'ouout.

epochs = 100

# creo delle liste che conterranno i valori di loss per tenerne traccia durante le varie epche

train_loss_values = []

test_loss_values = []

epoch_count = []

for epoch in range(epochs):

### Training

# 0. imposto la modalità in Training (da fare ad ogni epoca)

model_0.train()

# 1. passo i dati di training al modello il quale internamente invocherè il metoto forward() definito

# quanto è stata implementata la classe che estende pytorch. Ottengo i dati che andranno poi comprati

# dalla loss per ottenerne in valore medio assoluto.

y_pred = model_0(X_train)

# print(y_pred)

# 2. calcolo la loss utilizzando la funzione definita precedentemmente.

loss = loss_fn(y_pred, y_train)

# 3. reinizializzo l'optimizer in quanto tende ad accumulare i valori

optimizer.zero_grad()

# 4. effettua la back propagation, nella pratica Pytorch tiene traccia dei valori associati alla discesa del gradiente

# Quindi calcola la derivata parziale per determinare il minimo della curva dei delta tra valori predetti e valori di test

loss.backward()

# 5. ottimizza i parametri (una sola volta) e in base al valore "lr".

# NB: cambia quindi i valori dei tensori per cercare di farli avvicinare ai valori ottimali

optimizer.step()

### Testing

# indico a Pytrch che la fase di training è terminata e che ora devo valutare i parametri e paragonarli con i valori attesi

model_0.eval()

# predico i valori in

with torch.inference_mode():

# 1. Forward pass on test data

test_pred = model_0(X_test)

# 2. Caculate loss on test data

test_loss = loss_fn(test_pred, y_test.type(torch.float)) # predictions come in torch.float datatype, so comparisons need to be done with tensors of the same type

# Print out what's happening

if epoch % 10 == 0:

epoch_count.append(epoch)

# i valori vengono convertiti in numpy in quanto sono dei tensori pytorch

train_loss_values.append(loss.numpy())

test_loss_values.append(test_loss.numpy())

print(f"Epoch: {epoch} | MAE Train Loss: {loss} | MAE Test Loss: {test_loss} ")

e l'output sarà:

print (list(model_0.parameters()),model_0.state_dict())

Epoch: 0 | MAE Train Loss: 0.31288138031959534 | MAE Test Loss: 0.48106518387794495 delta: 0.1681838035583496

Epoch: 10 | MAE Train Loss: 0.1976713240146637 | MAE Test Loss: 0.3463551998138428 delta: 0.14868387579917908

Epoch: 20 | MAE Train Loss: 0.08908725529909134 | MAE Test Loss: 0.21729660034179688 delta: 0.12820935249328613

Epoch: 30 | MAE Train Loss: 0.053148526698350906 | MAE Test Loss: 0.14464017748832703 delta: 0.09149165451526642

Epoch: 40 | MAE Train Loss: 0.04543796554207802 | MAE Test Loss: 0.11360953003168106 delta: 0.06817156076431274

Epoch: 50 | MAE Train Loss: 0.04167863354086876 | MAE Test Loss: 0.09919948130846024 delta: 0.057520847767591476

Epoch: 60 | MAE Train Loss: 0.03818932920694351 | MAE Test Loss: 0.08886633068323135 delta: 0.05067700147628784

Epoch: 70 | MAE Train Loss: 0.03476089984178543 | MAE Test Loss: 0.0805937647819519 delta: 0.04583286494016647

Epoch: 80 | MAE Train Loss: 0.03132382780313492 | MAE Test Loss: 0.07232122868299484 delta: 0.040997400879859924

Epoch: 90 | MAE Train Loss: 0.02788739837706089 | MAE Test Loss: 0.06473556160926819 delta: 0.03684816509485245

Epoch: 100 | MAE Train Loss: 0.024458957836031914 | MAE Test Loss: 0.05646304413676262 delta: 0.032004088163375854

Epoch: 110 | MAE Train Loss: 0.021020207554101944 | MAE Test Loss: 0.04819049686193466 delta: 0.027170289307832718

Epoch: 120 | MAE Train Loss: 0.01758546568453312 | MAE Test Loss: 0.04060482233762741 delta: 0.02301935665309429

Epoch: 130 | MAE Train Loss: 0.014155393466353416 | MAE Test Loss: 0.03233227878808975 delta: 0.018176885321736336

Epoch: 140 | MAE Train Loss: 0.010716589167714119 | MAE Test Loss: 0.024059748277068138 delta: 0.01334315910935402

Epoch: 150 | MAE Train Loss: 0.0072835334576666355 | MAE Test Loss: 0.016474086791276932 delta: 0.009190553799271584

Epoch: 160 | MAE Train Loss: 0.0038517764769494534 | MAE Test Loss: 0.008201557211577892 delta: 0.004349780734628439

Epoch: 170 | MAE Train Loss: 0.008932482451200485 | MAE Test Loss: 0.005023092031478882 delta: -0.003909390419721603

Epoch: 180 | MAE Train Loss: 0.008932482451200485 | MAE Test Loss: 0.005023092031478882 delta: -0.003909390419721603

[Parameter containing:

tensor([0.6990], requires_grad=True), Parameter containing:

tensor([0.3093], requires_grad=True)] OrderedDict([('weights', tensor([0.6990])), ('bias', tensor([0.3093]))])

Mostriamo il grafico dei loss sul training e sui dati di testing

# Plot the loss curves

plt.plot(epoch_count, train_loss_values, label="Train loss")

plt.plot(epoch_count, test_loss_values, label="Test loss")

plt.title("Training and test loss curves")

plt.ylabel("Loss")

plt.xlabel("Epochs")

plt.legend();

e vediamo il grafico dei valori predetti vs i valori utilizzati per il traing

si può notare che dopo 180 epoche di training il modello riesce a predirre valori molto simili a quelli utilizzati per il training.

Salvare e caricare i parametri del modello

Dopo avere trovato i valori che meglio rappresentano il modello che vogliamo riprodurre vogliamo salvare i valori della rete neurale in modo da poterli ricaricare in un secondo momento senza dover riallenare la rete. Pytorch mette a disposizioni i metodo save e load per salvare su file system i parametri.

| PyTorch method | What does it do? |

|---|---|

| torch.save | Saves a serialzed object to disk using Python's [`pickle`](https://docs.python.org/3/library/pickle.html) utility. Models, tensors and various other Python objects like dictionaries can be saved using `torch.save`. |

| torch.load) | Uses `pickle`'s unpickling features to deserialize and load pickled Python object files (like models, tensors or dictionaries) into memory. You can also set which device to load the object to (CPU, GPU etc). |

| torch.nn.Module.load_state_dict | Loads a model's parameter dictionary (`model.state_dict()`) using a saved `state_dict()` object |

from pathlib import Path

# 1. Create models directory

MODEL_PATH = Path("C:/Users/userxx/Desktop")

MODEL_PATH.mkdir(parents=True, exist_ok=True)

# 2. Create model save path

MODEL_NAME = "01_pytorch_workflow_model_0.pth"

MODEL_SAVE_PATH = MODEL_PATH / MODEL_NAME

# 3. Save the model state dict

print(f"Saving model to: {MODEL_SAVE_PATH}")

torch.save(obj=model_0.state_dict(), # only saving the state_dict() only saves the models learned parameters

f=MODEL_SAVE_PATH)

verrà quindi creato un file con i bias e i weights, per caricare il modello invce:

# Instantiate a new instance of our model (this will be instantiated with random weights)

loaded_model_0 = LinearRegressionModel()

# Load the state_dict of our saved model (this will update the new instance of our model with trained weights)

loaded_model_0.load_state_dict(torch.load(f=MODEL_SAVE_PATH))

e provare il modello caricato:

# 1. Put the loaded model into evaluation mode

loaded_model_0.eval()

# 2. Use the inference mode context manager to make predictions

with torch.inference_mode():

loaded_model_preds = loaded_model_0(X_test) # perform a forward pass on the test data with the loaded model

Evoluzione del modello/uso della GPU

Creiamo ora un modello in grado di gestire un numero significativamente maggiore di layers e nuroni configurandoli più facilmente:

# Subclass nn.Module to make our model

class LinearRegressionModelV2(nn.Module):

def __init__(self):

super().__init__()

# utilizziamo un layer di quelli predefiniti da pytorch

# questa volta definiamo una semplice rete neurale fatta di un input layer e un output layer

# il modello libeare si basa sulla classica formula y = w*x + b

self.linear_layer = nn.Linear(in_features=1,

out_features=1)

# Definiamo la "forward computation" dove i valori i input "scorrono" attraverso

# la rete neurale defininta nel costruttore della classe

def forward(self, x: torch.Tensor) -> torch.Tensor:

return self.linear_layer(x)

# setto il seed per facilitare il check dei paramertri

torch.manual_seed(42)

model_1 = LinearRegressionModelV2()

print( model_1)

>LinearRegressionModelV2( (linear_layer): Linear(in_features=1, out_features=1, bias=True) )

print( model_1.state_dict())

>OrderedDict([('linear_layer.weight', tensor([[0.7645]])), ('linear_layer.bias', tensor([0.8300]))])

vediamo di forzare l'uso della GPU, se presente:

# Setup device agnostic code

device = "cuda" if torch.cuda.is_available() else "cpu"

print(f"Using device: {device}")

> Using device: cuda

# Check model device

next(model_1.parameters()).device

>device(type='cpu')

si evince che di default viene la utilizzata la CPU, il nostro intente invece è utilizzare la GPU se presente e creare un sistema "agnostico" in grado di sfruttare le risorse al meglio, per cui settiamo il decice migliore:

# Set model to GPU if it's availalble, otherwise it'll default to CPU

model_1.to(device) # the device variable was set above to be "cuda" if available or "cpu" if not

next(model_1.parameters()).device

# ora utilizza la GPU

>device(type='cuda', index=0)

ripetiamo il training con il nuovo modello:

# Create loss function

loss_fn = nn.L1Loss()

# Create optimizer

optimizer = torch.optim.SGD(params=model_1.parameters(), # optimize newly created model's parameters

lr=0.01)

torch.manual_seed(42)

# Set the number of epochs

epochs = 1000

# !!!!!!!!!!!!!

# Put data on the available device

# Without this, error will happen (not all model/data on device)

# !!!!!!!!!!!!!

X_train = X_train.to(device)

X_test = X_test.to(device)

y_train = y_train.to(device)

y_test = y_test.to(device)

for epoch in range(epochs):

### Training

model_1.train() # train mode is on by default after construction

# 1. Forward pass

y_pred = model_1(X_train)

# 2. Calculate loss

loss = loss_fn(y_pred, y_train)

# 3. Zero grad optimizer

optimizer.zero_grad()

# 4. Loss backward

loss.backward()

# 5. Step the optimizer

optimizer.step()

### Testing

model_1.eval() # put the model in evaluation mode for testing (inference)

# 1. Forward pass

with torch.inference_mode():

test_pred = model_1(X_test)

# 2. Calculate the loss

test_loss = loss_fn(test_pred, y_test)

if epoch % 100 == 0:

print(f"Epoch: {epoch} | Train loss: {loss} | Test loss: {test_loss}")

e l'output:

# Find our model's learned parameters

from pprint import pprint # pprint = pretty print, see: https://docs.python.org/3/library/pprint.html

print("The model learned the following values for weights and bias:")

pprint(model_1.state_dict())

print("\nAnd the original values for weights and bias are:")

print(f"weights: {weight}, bias: {bias}")

The model learned the following values for weights and bias:

OrderedDict([('linear_layer.weight', tensor([[0.6968]], device='cuda:0')),

('linear_layer.bias', tensor([0.3025], device='cuda:0'))])

And the original values for weights and bias are:

weights: 0.7, bias: 0.3

Fare delle previsioni

# Turn model into evaluation mode

model_1.eval()

# Make predictions on the test data

with torch.inference_mode():

y_preds = model_1(X_test)

print(y_preds)

tensor([[0.8600],

[0.8739],

[0.8878],

[0.9018],

[0.9157],

[0.9296],

[0.9436],

[0.9575],

[0.9714],

[0.9854]], device='cuda:0')

Facciamo il plot ma attenzione che i tensori sono nella GPU mentre la funzione di plot lavora con la CPU (numpy), bisognerà quindi trasferire i valori in numpy primna di plottarli.

plot_predictions(predictions=y_preds) # -> non funziona in quanto i dati sono nella GPU

>TypeError: can't convert cuda:0 device type tensor to numpy. Use Tensor.cpu() to copy the tensor to host memory first.

# Put data on the CPU and plot it

plot_predictions(predictions=y_preds.cpu())

Salvare il modello

from pathlib import Path

# 1. Create models directory

MODEL_PATH = Path("path alla directoty dei modelli")

MODEL_PATH.mkdir(parents=True, exist_ok=True)

# 2. Create model save path

MODEL_NAME = "01_pytorch_workflow_model_1.pth"

MODEL_SAVE_PATH = MODEL_PATH / MODEL_NAME

# 3. Save the model state dict

print(f"Saving model to: {MODEL_SAVE_PATH}")

torch.save(obj=model_1.state_dict(), # only saving the state_dict() only saves the models learned parameters

f=MODEL_SAVE_PATH)

Caricare il modello

# Instantiate a fresh instance of LinearRegressionModelV2

loaded_model_1 = LinearRegressionModelV2()

# Load model state dict

loaded_model_1.load_state_dict(torch.load(MODEL_SAVE_PATH))

# Put model to target device (if your data is on GPU, model will have to be on GPU to make predictions)

loaded_model_1.to(device)

print(f"Loaded model:\n{loaded_model_1}")

print(f"Model on device:\n{next(loaded_model_1.parameters()).device}")

testare il modello caricato

# Evaluate loaded model

loaded_model_1.eval()

with torch.inference_mode():

loaded_model_1_preds = loaded_model_1(X_test)

y_preds == loaded_model_1_preds

>tensor([[True],

[True],

[True],

[True],

[True],

[True],

[True],

[True],

[True],

[True]], device='cuda:0')

Classificazione (binary classification)

Introduzione

In questa lezione andremo a vedere la classificazione in base a delle tipolgie di dati, differisce quindi dalla regressione che si basa sulla predizione di un valore numero.

La classificazione può essere "binaria" es. cats vs dogs, oppure multiclass classification se abbiamo più di due tipologie da classificare.

Di seguito alcuni esempi di classificazione:

Cosa andreamo a trattare nel coso:

| **Topic** | **Contents** |

|---|---|

| **0. Architecture of a classification neural network** | Neural networks can come in almost any shape or size, but they typically follow a similar floor plan. |

| **1. Getting binary classification data ready** | Data can be almost anything but to get started we're going to create a simple binary classification dataset. |

| **2. Building a PyTorch classification model** | Here we'll create a model to learn patterns in the data, we'll also choose a **loss function**, **optimizer** and build a **training loop** specific to classification. |

| **3. Fitting the model to data (training)** | We've got data and a model, now let's let the model (try to) find patterns in the (**training**) data. |

| **4. Making predictions and evaluating a model (inference)** | Our model's found patterns in the data, let's compare its findings to the actual (**testing**) data. |

| **5. Improving a model (from a model perspective)** | We've trained an evaluated a model but it's not working, let's try a few things to improve it. |

| **6. Non-linearity** | So far our model has only had the ability to model straight lines, what about non-linear (non-straight) lines? |

| **7. Replicating non-linear functions** | We used **non-linear functions** to help model non-linear data, but what do these look like? |

| **8. Putting it all together with multi-class classification** | Let's put everything we've done so far for binary classification together with a multi-class classification problem. |

Partiamo con un esempio di classificazione basato su due serie di cerchi che si annidano tra di loro. Utilizziamo sklearn per ottenere questo set di dati:

from sklearn.datasets import make_circles

Make 1000 samples

n_samples = 1000

X, y = make_circles(n_samples,

noise=0.03, # a little bit of noise to the dots

random_state=42) # keep random state so we get the same valuesCreate circles

proviamo a vedere cosa contengono le X e le y.

print(f"First 5 X features:\n{X[:5]}")

print(f"\nFirst 5 y labels:\n{y[:5]}")

First 5 X features: [[ 0.75424625 0.23148074] [-0.75615888 0.15325888] [-0.81539193 0.17328203] [-0.39373073 0.69288277] [ 0.44220765 -0.89672343]]

First 5 y labels:[1 1 1 1 0]

quindi le X contengono delle coordinate metre le y si suddividono in valori zero e uno. Quindi siamo di fronte ad una classificazione binaria, ma vediamola graficamente:

import matplotlib.pyplot as plt

plt.scatter(x=X[:, 0],

y=X[:, 1],

c=y,

cmap=plt.cm.RdYlBu);

Quindi riassimento le X contengo le coordinate del cerchio, mentre le y il colore. Dalla figura si vede che i cerchi sono suffidivisi in due macrogruppi posizionati uno all'interno dell'altro.

Vediamo le shape:

# Check the shapes of our features and labels

X.shape, y.shape

((1000, 2), (1000,))

X ha una shape di due, mentre le y non ha uno shape in quanto è uno scalare di un valore.

Ora converiamo da numpy a tensori

# Turn data into tensors

# Otherwise this causes issues with computations later on

import torch

X = torch.from_numpy(X).type(torch.float)

y = torch.from_numpy(y).type(torch.float)

View the first five samples

print (X[:5], y[:5])

(tensor([[ 0.7542, 0.2315],[-0.7562, 0.1533],[-0.8154, 0.1733],[-0.3937, 0.6929],[ 0.4422, -0.8967]]),tensor([1., 1., 1., 1., 0.]))

lo converiamo in float32 (float) perchè numpy è in float64

splittiamo i dati in training e test

# Split data into train and test sets

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42) # make the random splitLa funziona "train_test_split" splitta le featurues e le label per noi. :)

Bene, ora costruiamo il modello:

# Standard PyTorch imports

import torch

from torch import nn

# Make device agnostic code

device = "cuda" if torch.cuda.is_available() else "cpu" deviceConstruct a model class that subclasses nn.Module

class CircleModelV0(nn.Module):

def init(self):

super().init()

# 2. Create 2 nn.Linear layers capable of handling X and y input and output shapes

self.layer_1 = nn.Linear(in_features=2, out_features=5)

# takes in 2 features (X), produces 5 features

self.layer_2 = nn.Linear(in_features=5, out_features=1) # takes in 5 features, produces 1 feature (y)

# 3. Define a forward method containing the forward pass computation

def forward(self, x):

# Return the output of layer_2, a single feature, the same shape as y

return self.layer_2(self.layer_1(x)) # computation goes through layer_1 first then the output of layer_1 goes through layer_2

Create an instance of the model and send it to target device

model_0 = CircleModelV0().to(device)model_0

NB: una regola per settare il numero di feautres in input è fallo coincidere con le features del dataset. Idem per le features di output.

esiste inoltre un altro modo per rappresentare il modello in stile "Tensorflow", es:

# costruisco il modello

model_0 = nn.Sequential(

nn.Linear(in_features=2, out_features=6),

nn.Linear(in_features=6, out_features=2),

nn.Linear(in_features=2, out_features=1)

).to(device)model_0

Questo tipo di definizione del modello è "limitato" dal fatto che è sequenziale e quindi meno flessibile rispetto a reti più articolate.

Il modello può essere rappresentato graficamente come sotto riportato:

ora, prima di fare il training del modello proviamo a passare i dati di test per vedere che output viene generato. (ovviamente essendo un modello non "allenato" saranno dati casuali)

# Make predictions with the model

with torch.inference_mode():

untrained_preds = model_0(X_test.to(device))

print(f"Length of predictions: {len(untrained_preds)}, Shape: {untrained_preds.shape}")

print(f"Length of test samples: {len(y_test)}, Shape: {y_test.shape}")

print(f"\nFirst 10 predictions:\n{untrained_preds[:10]}")

print(f"\nFirst 10 test labels:\n{y_test[:10]}")

Length of predictions: 200, Shape: torch.Size([200, 1]) Length of test samples: 200, Shape: torch.Size([200])

First 10 predictions: tensor([[-0.7534], [-0.6841], [-0.7949], [-0.7423], [-0.5721], [-0.5315], [-0.5128], [-0.4765], [-0.8042], [-0.6770]], device='cuda:0', grad_fn=<SliceBackward0>)

First 10 test labels:tensor([1., 0., 1., 0., 1., 1., 0., 0., 1., 0.])

Possiamo notare che che l'output non è zero oppure uno come invce sono le labels... come mai? lo vedremo più avanti...

Prima di fare il training settiamo la "loss function" e "l'optimizer".

Setup loss function and optimizer

La domanda che ci si pone di sempre quale loss function e optimizer utilzzare?

Per la classfificazione in genere si utilizza la binary cross entropy, vedi tabella esempio sotto ripotata:

| Loss function/Optimizer | Problem type | PyTorch Code |

|---|---|---|

| Stochastic Gradient Descent (SGD) optimizer | Classification, regression, many others. | torch.optim.SGD()(https://pytorch.org/docs/stable/generated/torch.optim.SGD.html) |

| Adam Optimizer | Classification, regression, many others. |

torch.optim.Adam() `https://pytorch.org/docs/stable/generated/torch.optim.Adam.html) |

| Binary cross entropy loss | Binary classification |

torch.nn.BCELossWithLogits( https://pytorch.org/docs/stable/generated/torch.nn.BCEWithLogitsLoss.html) or [`torch.nn.BCELoss`](https://pytorch.org/docs/stable/generated/torch.nn.BCELoss.html) |

| Cross entropy loss | Mutli-class classification | [`torch.nn.CrossEntropyLoss`](https://pytorch.org/docs/stable/generated/torch.nn.CrossEntropyLoss.html) |

| Mean absolute error (MAE) or L1 Loss | Regression | [`torch.nn.L1Loss`](https://pytorch.org/docs/stable/generated/torch.nn.L1Loss.html) |

| Mean squared error (MSE) or L2 Loss | Regression | [`torch.nn.MSELoss`](https://pytorch.org/docs/stable/generated/torch.nn.MSELoss.html#torch.nn.MSELoss) |

Riassumento la loss function misura quanto il modello si distanzia dai valori attesi.

Mentre per gli optimizer servono per migliorare il modello che poi attraverso la loss funzion verrà valutato.

In genere si utilizza SGD o Adam..

Ok creiamo la loss e l'optimizer:

# Create a loss function

# loss_fn = nn.BCELoss() # BCELoss = no sigmoid built-in

loss_fn = nn.BCEWithLogitsLoss() # BCEWithLogitsLoss = sigmoid built-in

Create an optimizer

optimizer = torch.optim.SGD(params=model_0.parameters(), lr=0.1)

Accuracy e Loss function

Definiamo anche il concetto di "accuracy".

La loss functuon misura quanto le preduzioni si allontanano dai valori desierati, mentre la Accuracy indica la percentuale con la quale il modello fa delle previsioni corrette. La differenza è sottile, e in questo momento non mi è chiara, credo che l'accuracy dipenda dalla loss e che indichi con una percentuale quello che la loss esprime in valori numerici specifici per il modello. Ad ogni modo vengono utilizzate entramb le misure per verificare la buona qualità del modello.

Implementiamo la accuracy

# Calculate accuracy (a classification metric)

def accuracy_fn(y_true, y_pred):

correct = torch.eq(y_true, y_pred).sum().item() # torch.eq() calculates where two tensors are equal

acc = (correct / len(y_pred)) * 100

return acc

Logits

I logits rappresentano l'output "grezzo" del modello. I logits devono essere convertiti nella previsione probabilistica passandoli ad una "funzione di attivazione". (es. sigmoid per la "binari cross entropy", softmax per la multiclass classificazion) Per noi essere "discretizzati" (i valori probabilistici) mediante l'uso di funzioni come "round".

Vediamo quindi come rivedere la fase di training in funzione dei logits. NB per capire la rappresentazione dei logits vedi il commento nel training loop.

for epoch in range(epochs):

### Training

# 0. imposto la modalità in Training (da fare ad ogni epoca)

model_0.train()

# 1. calcolo l'output con i parametri del modello, NB devo gare la "squeeze" percheè va ritdotta di una dimensione

# quanto l'output del modello ne aggiunge una.

# I logits sono i valori "grezzi" che, nella caso delle classificazioni BINARIE, NON possono essere comparati

# con i valori discreti 0/1 delle t_test.

# I logits quindive dobranno essere convertiti attraverso le funzioni come per la esempio la sigmoing, che

# non fa altro che ricondurli a valori compresi tra zero e uno che, poi andranno "discretizzati" a 0/1 atttraverso

# l'uso di funzioni di arrotondamento come per es. la round.

y_logits = model_0(X_train).squeeze() #

# pred. logits -> pred. probabilities -> labels 0/1

y_pred = torch.round(torch.sigmoid(y_logits))

# 2. calculate loss/accuracy

# calcolo la loss, da nota che viene utilizzata come loss function la "BCEWithLogitsLoss" che vuole in input

# dirattamente i logits anzichè i valori predetti, in quanto gli applica la sigmoid e la round in automatico

# per poi paragonli con le y_train "discrete".

loss = loss_fn(y_logits, y_train) # nn.BCEWithLogitsLoss()

# calcololiamo anche la percentuale di accuratezza.

acc = accuracy_fn(y_true=y_train, y_pred=y_pred)

# 3. reinizializzo l'optimizer in quanto tende ad accumulare i valori

optimizer.zero_grad()

# 4. effettua la back propagation, nella pratica Pytorch tiene traccia dei valori associati alla discesa del gradiente

# Quindi calcola la derivata parziale per determinare il minimo della curva dei delta tra valori predetti e valori di test

loss.backward()

# 5. ottimizza i parametri (una sola volta) e in base al valore "lr".

# NB: cambia quindi i valori dei tensori per cercare di farli avvicinare ai valori ottimali

optimizer.step()

### Testing (in questa fase vengono passati i valori non trainati di test)

# indico a Pytrch che la fase di training è terminata e che ora devo valutare i parametri e paragonarli con i valori attesi

model_0.eval()

with torch.inference_mode(): # disabilito la fase di training

test_logits = model_0(X_test).squeeze() #

# pred. logits -> pred. probabilities -> labels 0/1

test_pred = torch.round(torch.sigmoid(test_logits))

# per poi paragonli con le y_train "discrete".

test_loss = loss_fn(test_logits, y_test) # nn.BCEWithLogitsLoss()

# calcololiamo anche la percentuale di accuratezza.

test_acc = accuracy_fn(y_true=y_test, y_pred=test_pred)

# Print out what's happening

if epoch % 10 == 0:

print(f"Epoch: {epoch} | Train -> Loss: {loss:.5f} , Acc: {acc:.2f}% | Test -> Loss: {test_loss:.5f}%. Acc: {test_acc:.2f}% ")L'output della funzione sarà:

Python 3.10.8 | packaged by conda-forge | (main, Nov 24 2022, 14:07:00) [MSC v.1916 64 bit (AMD64)]

Type 'copyright', 'credits' or 'license' for more information

IPython 8.7.0 -- An enhanced Interactive Python. Type '?' for help.

PyDev console: using IPython 8.7.0

Python 3.10.8 | packaged by conda-forge | (main, Nov 24 2022, 14:07:00) [MSC v.1916 64 bit (AMD64)] on win32

runfile('C:\\lavori\\formazione_py\\src\\formazione\\DanielBourkePytorch\\02_classification.py', wdir='C:\\lavori\\formazione_py\\src\\formazione\\DanielBourkePytorch')

Epoch: 0 | Train -> Loss: 0.70155 , Acc: 50.00% | Test -> Loss: 0.70146%. Acc: 50.00%

Epoch: 10 | Train -> Loss: 0.69617 , Acc: 57.50% | Test -> Loss: 0.69654%. Acc: 55.50%

Epoch: 20 | Train -> Loss: 0.69453 , Acc: 51.75% | Test -> Loss: 0.69501%. Acc: 54.50%

Epoch: 30 | Train -> Loss: 0.69395 , Acc: 50.38% | Test -> Loss: 0.69448%. Acc: 53.50%

Epoch: 40 | Train -> Loss: 0.69370 , Acc: 49.50% | Test -> Loss: 0.69427%. Acc: 53.50%

Epoch: 50 | Train -> Loss: 0.69358 , Acc: 49.50% | Test -> Loss: 0.69417%. Acc: 53.00%

Epoch: 60 | Train -> Loss: 0.69349 , Acc: 49.88% | Test -> Loss: 0.69412%. Acc: 52.00%

Epoch: 70 | Train -> Loss: 0.69343 , Acc: 49.62% | Test -> Loss: 0.69409%. Acc: 51.50%

Epoch: 80 | Train -> Loss: 0.69337 , Acc: 49.25% | Test -> Loss: 0.69408%. Acc: 51.50%

Epoch: 90 | Train -> Loss: 0.69333 , Acc: 49.62% | Test -> Loss: 0.69407%. Acc: 51.50%

Backend MacOSX is interactive backend. Turning interactive mode on.che è pessimo in quanto il modello utilizza un "linear model" che sostanzialmente rappresenta una linea che negli assi cartesiani ha un'intercetta e una direzione e quindi non riscurà mai a rappresentare i dati.

Bisoga quindi cambiare modello.

In particolare bisogna introdurre una funziona non lineare come per es. la ReLU che nella prarica ritorna zero se i valori sono <=0 oppure il valore stesso se >0.

Di seguito il grafico della funzione non lineare ReLU.

Modifichiamo quindi il modello aggiungendo dopo l'hidden layer la funzione di attivazione non lineare come nell'esempio di seguito:

# costruisco il modello

model_0 = nn.Sequential(

nn.Linear(in_features=2, out_features=10),

nn.ReLU(),

nn.Linear(in_features=10, out_features=10),

nn.ReLU(),

nn.Linear(in_features=10, out_features=1))che produce risultati decisamente migliori:

Epoch: 0 | Train -> Loss: 0.69656 , Acc: 47.38% | Test -> Loss: 0.69921%. Acc: 46.00%

Epoch: 10 | Train -> Loss: 0.69417 , Acc: 46.00% | Test -> Loss: 0.69735%. Acc: 43.00%

Epoch: 20 | Train -> Loss: 0.69257 , Acc: 49.62% | Test -> Loss: 0.69603%. Acc: 49.50%

Epoch: 30 | Train -> Loss: 0.69123 , Acc: 50.38% | Test -> Loss: 0.69486%. Acc: 48.50%

Epoch: 40 | Train -> Loss: 0.69000 , Acc: 51.00% | Test -> Loss: 0.69374%. Acc: 49.50%

Epoch: 50 | Train -> Loss: 0.68884 , Acc: 51.50% | Test -> Loss: 0.69266%. Acc: 49.50%

Epoch: 60 | Train -> Loss: 0.68772 , Acc: 52.62% | Test -> Loss: 0.69162%. Acc: 48.50%

Epoch: 70 | Train -> Loss: 0.68663 , Acc: 53.00% | Test -> Loss: 0.69060%. Acc: 49.00%

Epoch: 80 | Train -> Loss: 0.68557 , Acc: 53.25% | Test -> Loss: 0.68960%. Acc: 48.50%

Epoch: 90 | Train -> Loss: 0.68453 , Acc: 53.25% | Test -> Loss: 0.68862%. Acc: 48.50%

Epoch: 100 | Train -> Loss: 0.68349 , Acc: 54.12% | Test -> Loss: 0.68765%. Acc: 49.00%

Epoch: 110 | Train -> Loss: 0.68246 , Acc: 54.37% | Test -> Loss: 0.68670%. Acc: 48.50%

Epoch: 120 | Train -> Loss: 0.68143 , Acc: 54.87% | Test -> Loss: 0.68574%. Acc: 49.00%

Epoch: 130 | Train -> Loss: 0.68039 , Acc: 54.75% | Test -> Loss: 0.68478%. Acc: 49.00%

Epoch: 140 | Train -> Loss: 0.67935 , Acc: 55.50% | Test -> Loss: 0.68382%. Acc: 50.00%

Epoch: 150 | Train -> Loss: 0.67829 , Acc: 55.62% | Test -> Loss: 0.68285%. Acc: 50.50%

Epoch: 160 | Train -> Loss: 0.67722 , Acc: 57.25% | Test -> Loss: 0.68188%. Acc: 53.50%

Epoch: 170 | Train -> Loss: 0.67614 , Acc: 59.62% | Test -> Loss: 0.68090%. Acc: 57.50%

Epoch: 180 | Train -> Loss: 0.67504 , Acc: 61.62% | Test -> Loss: 0.67991%. Acc: 59.00%

Epoch: 190 | Train -> Loss: 0.67390 , Acc: 63.75% | Test -> Loss: 0.67891%. Acc: 59.50%

Epoch: 200 | Train -> Loss: 0.67275 , Acc: 65.50% | Test -> Loss: 0.67789%. Acc: 60.50%

Epoch: 210 | Train -> Loss: 0.67156 , Acc: 66.50% | Test -> Loss: 0.67686%. Acc: 60.50%

Epoch: 220 | Train -> Loss: 0.67036 , Acc: 68.62% | Test -> Loss: 0.67580%. Acc: 63.50%

Epoch: 230 | Train -> Loss: 0.66912 , Acc: 70.75% | Test -> Loss: 0.67473%. Acc: 64.50%

Epoch: 240 | Train -> Loss: 0.66787 , Acc: 72.00% | Test -> Loss: 0.67363%. Acc: 66.00%

Epoch: 250 | Train -> Loss: 0.66658 , Acc: 73.75% | Test -> Loss: 0.67252%. Acc: 67.50%

Epoch: 260 | Train -> Loss: 0.66526 , Acc: 74.88% | Test -> Loss: 0.67139%. Acc: 69.00%

Epoch: 270 | Train -> Loss: 0.66392 , Acc: 75.75% | Test -> Loss: 0.67025%. Acc: 69.50%

Epoch: 280 | Train -> Loss: 0.66256 , Acc: 77.62% | Test -> Loss: 0.66909%. Acc: 72.00%

Epoch: 290 | Train -> Loss: 0.66118 , Acc: 78.75% | Test -> Loss: 0.66791%. Acc: 72.50%

Epoch: 300 | Train -> Loss: 0.65978 , Acc: 79.75% | Test -> Loss: 0.66672%. Acc: 75.50%

Epoch: 310 | Train -> Loss: 0.65835 , Acc: 80.75% | Test -> Loss: 0.66552%. Acc: 76.00%

Epoch: 320 | Train -> Loss: 0.65689 , Acc: 81.88% | Test -> Loss: 0.66431%. Acc: 77.00%

Epoch: 330 | Train -> Loss: 0.65540 , Acc: 82.75% | Test -> Loss: 0.66309%. Acc: 77.50%

Epoch: 340 | Train -> Loss: 0.65390 , Acc: 84.38% | Test -> Loss: 0.66183%. Acc: 78.50%

Epoch: 350 | Train -> Loss: 0.65237 , Acc: 85.12% | Test -> Loss: 0.66056%. Acc: 78.50%

Epoch: 360 | Train -> Loss: 0.65083 , Acc: 85.25% | Test -> Loss: 0.65927%. Acc: 81.00%

Epoch: 370 | Train -> Loss: 0.64925 , Acc: 85.88% | Test -> Loss: 0.65797%. Acc: 81.50%

Epoch: 380 | Train -> Loss: 0.64763 , Acc: 86.38% | Test -> Loss: 0.65664%. Acc: 83.00%

Epoch: 390 | Train -> Loss: 0.64599 , Acc: 87.00% | Test -> Loss: 0.65530%. Acc: 83.50%

Epoch: 400 | Train -> Loss: 0.64430 , Acc: 87.38% | Test -> Loss: 0.65394%. Acc: 84.50%

Epoch: 410 | Train -> Loss: 0.64258 , Acc: 88.75% | Test -> Loss: 0.65256%. Acc: 85.00%

Epoch: 420 | Train -> Loss: 0.64083 , Acc: 89.50% | Test -> Loss: 0.65115%. Acc: 86.00%

Epoch: 430 | Train -> Loss: 0.63904 , Acc: 89.62% | Test -> Loss: 0.64971%. Acc: 86.50%

Epoch: 440 | Train -> Loss: 0.63723 , Acc: 90.75% | Test -> Loss: 0.64825%. Acc: 87.00%

Epoch: 450 | Train -> Loss: 0.63540 , Acc: 91.38% | Test -> Loss: 0.64678%. Acc: 87.00%

Epoch: 460 | Train -> Loss: 0.63354 , Acc: 92.38% | Test -> Loss: 0.64529%. Acc: 87.00%

Epoch: 470 | Train -> Loss: 0.63165 , Acc: 93.00% | Test -> Loss: 0.64377%. Acc: 88.00%

Epoch: 480 | Train -> Loss: 0.62974 , Acc: 93.38% | Test -> Loss: 0.64222%. Acc: 89.00%

Epoch: 490 | Train -> Loss: 0.62780 , Acc: 93.50% | Test -> Loss: 0.64065%. Acc: 91.00%

Epoch: 500 | Train -> Loss: 0.62585 , Acc: 94.38% | Test -> Loss: 0.63905%. Acc: 91.50%

Epoch: 510 | Train -> Loss: 0.62386 , Acc: 94.75% | Test -> Loss: 0.63746%. Acc: 92.50%

Epoch: 520 | Train -> Loss: 0.62183 , Acc: 95.25% | Test -> Loss: 0.63584%. Acc: 92.50%

Epoch: 530 | Train -> Loss: 0.61979 , Acc: 95.50% | Test -> Loss: 0.63421%. Acc: 92.50%

Epoch: 540 | Train -> Loss: 0.61773 , Acc: 95.75% | Test -> Loss: 0.63255%. Acc: 92.50%

Epoch: 550 | Train -> Loss: 0.61564 , Acc: 95.62% | Test -> Loss: 0.63088%. Acc: 93.00%

Epoch: 560 | Train -> Loss: 0.61351 , Acc: 96.00% | Test -> Loss: 0.62917%. Acc: 93.50%

Epoch: 570 | Train -> Loss: 0.61136 , Acc: 96.00% | Test -> Loss: 0.62742%. Acc: 94.00%

Epoch: 580 | Train -> Loss: 0.60919 , Acc: 96.12% | Test -> Loss: 0.62565%. Acc: 94.50%

Epoch: 590 | Train -> Loss: 0.60699 , Acc: 96.00% | Test -> Loss: 0.62385%. Acc: 94.50%

Epoch: 600 | Train -> Loss: 0.60477 , Acc: 96.50% | Test -> Loss: 0.62203%. Acc: 94.50%

Epoch: 610 | Train -> Loss: 0.60253 , Acc: 96.50% | Test -> Loss: 0.62020%. Acc: 94.00%

Epoch: 620 | Train -> Loss: 0.60026 , Acc: 96.75% | Test -> Loss: 0.61833%. Acc: 94.00%

Epoch: 630 | Train -> Loss: 0.59796 , Acc: 97.00% | Test -> Loss: 0.61643%. Acc: 94.50%

Epoch: 640 | Train -> Loss: 0.59563 , Acc: 97.25% | Test -> Loss: 0.61449%. Acc: 94.50%

Epoch: 650 | Train -> Loss: 0.59327 , Acc: 97.38% | Test -> Loss: 0.61253%. Acc: 94.50%

Epoch: 660 | Train -> Loss: 0.59086 , Acc: 97.62% | Test -> Loss: 0.61053%. Acc: 94.50%

Epoch: 670 | Train -> Loss: 0.58843 , Acc: 97.62% | Test -> Loss: 0.60850%. Acc: 94.00%

Epoch: 680 | Train -> Loss: 0.58595 , Acc: 97.88% | Test -> Loss: 0.60642%. Acc: 95.00%

Epoch: 690 | Train -> Loss: 0.58343 , Acc: 97.88% | Test -> Loss: 0.60429%. Acc: 95.50%

Epoch: 700 | Train -> Loss: 0.58088 , Acc: 97.88% | Test -> Loss: 0.60211%. Acc: 95.50%

Epoch: 710 | Train -> Loss: 0.57830 , Acc: 98.00% | Test -> Loss: 0.59991%. Acc: 96.00%

Epoch: 720 | Train -> Loss: 0.57569 , Acc: 98.25% | Test -> Loss: 0.59767%. Acc: 96.50%

Epoch: 730 | Train -> Loss: 0.57305 , Acc: 98.38% | Test -> Loss: 0.59541%. Acc: 96.50%

Epoch: 740 | Train -> Loss: 0.57037 , Acc: 98.50% | Test -> Loss: 0.59309%. Acc: 96.50%

Epoch: 750 | Train -> Loss: 0.56766 , Acc: 98.50% | Test -> Loss: 0.59073%. Acc: 96.50%

Epoch: 760 | Train -> Loss: 0.56493 , Acc: 98.62% | Test -> Loss: 0.58835%. Acc: 97.00%

Epoch: 770 | Train -> Loss: 0.56216 , Acc: 98.62% | Test -> Loss: 0.58594%. Acc: 97.00%

Epoch: 780 | Train -> Loss: 0.55935 , Acc: 98.62% | Test -> Loss: 0.58352%. Acc: 97.00%

Epoch: 790 | Train -> Loss: 0.55652 , Acc: 98.88% | Test -> Loss: 0.58106%. Acc: 97.00%

Epoch: 800 | Train -> Loss: 0.55365 , Acc: 98.88% | Test -> Loss: 0.57855%. Acc: 97.00%

Epoch: 810 | Train -> Loss: 0.55075 , Acc: 98.88% | Test -> Loss: 0.57604%. Acc: 97.00%

Epoch: 820 | Train -> Loss: 0.54782 , Acc: 98.88% | Test -> Loss: 0.57351%. Acc: 97.00%

Epoch: 830 | Train -> Loss: 0.54485 , Acc: 99.00% | Test -> Loss: 0.57095%. Acc: 97.00%

Epoch: 840 | Train -> Loss: 0.54185 , Acc: 99.00% | Test -> Loss: 0.56836%. Acc: 97.00%

Epoch: 850 | Train -> Loss: 0.53884 , Acc: 99.00% | Test -> Loss: 0.56572%. Acc: 97.50%

Epoch: 860 | Train -> Loss: 0.53580 , Acc: 99.00% | Test -> Loss: 0.56307%. Acc: 97.50%

Epoch: 870 | Train -> Loss: 0.53274 , Acc: 99.00% | Test -> Loss: 0.56040%. Acc: 97.50%

Epoch: 880 | Train -> Loss: 0.52964 , Acc: 99.00% | Test -> Loss: 0.55773%. Acc: 97.50%

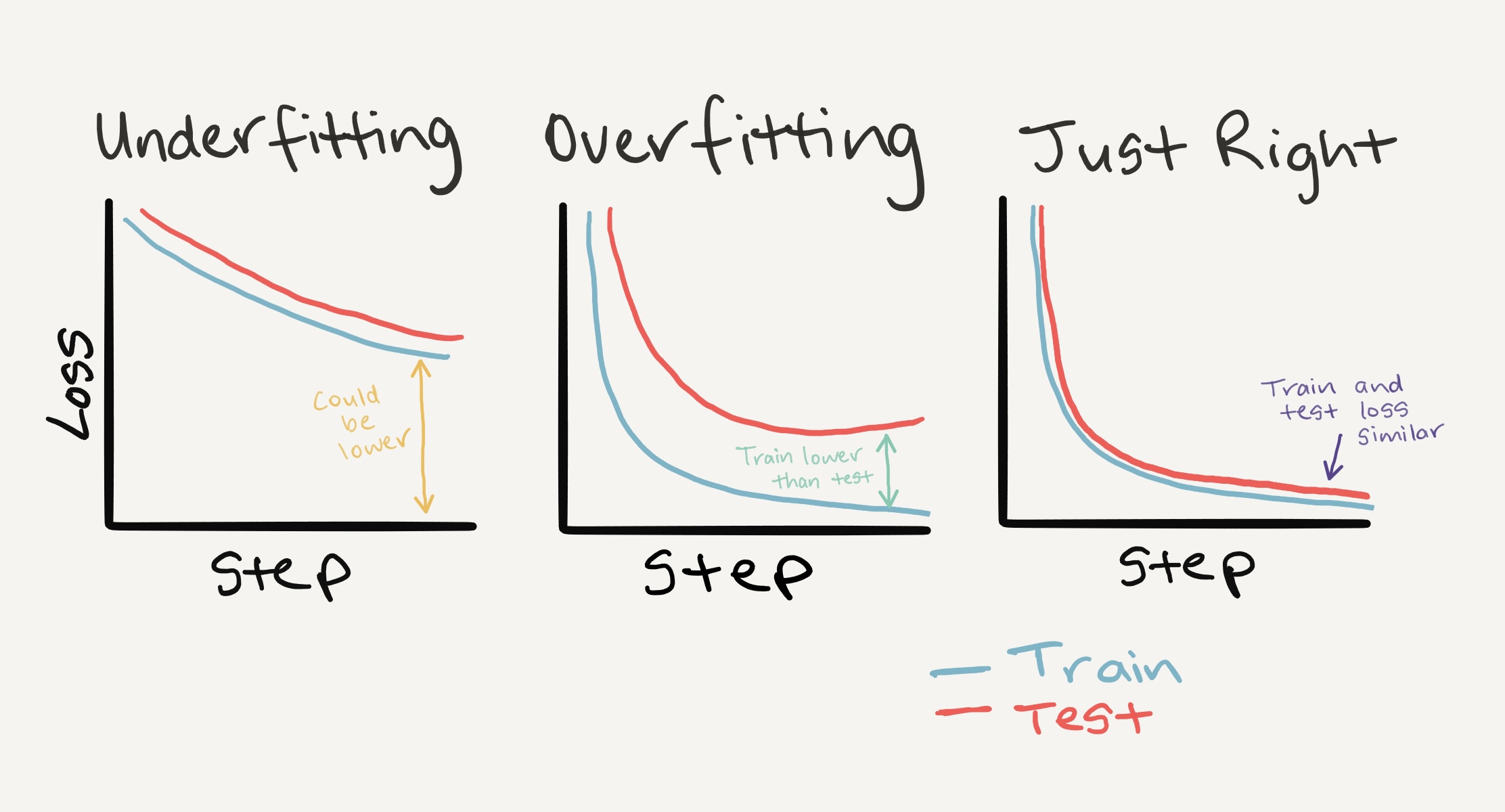

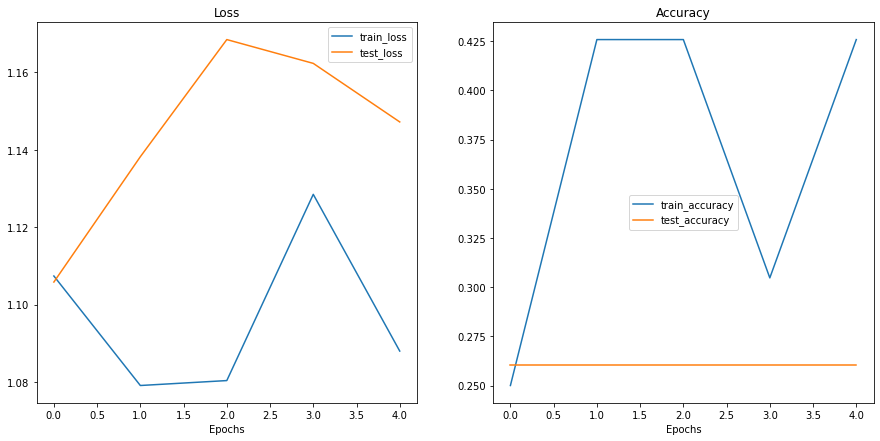

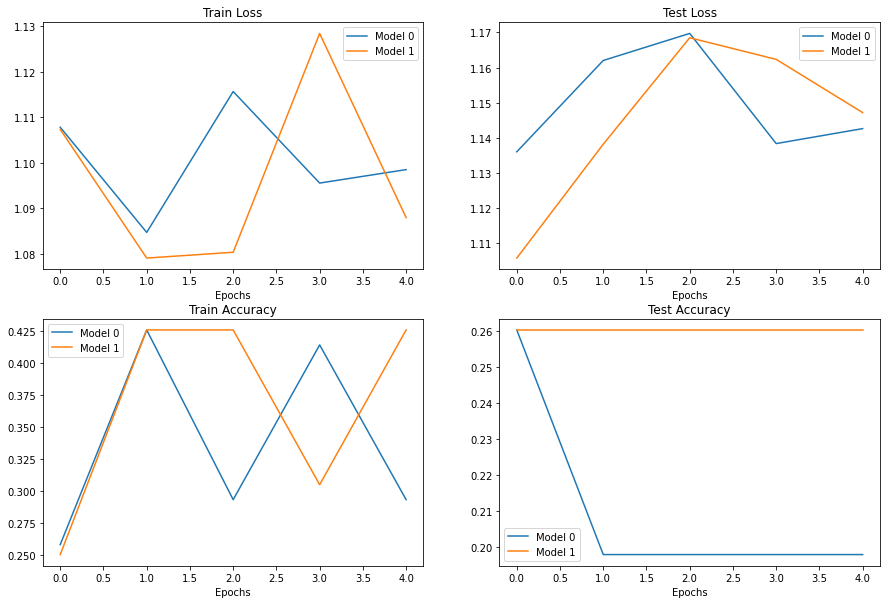

Epoch: 890 | Train -> Loss: 0.52652 , Acc: 99.12% | Test -> Loss: 0.55503%. Acc: 97.50%